High-Efficiency Liquid-Cooled Thermal System Design for Data Centers

Introduction

1 Composition of the Data Center Cooling System

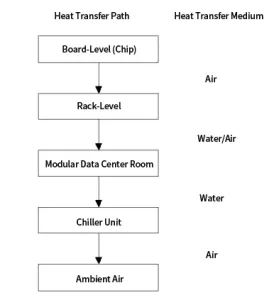

Most of the electrical energy consumed by IT equipment is converted into waste heat. To keep IT equipment within its proper operating temperature range, data centers use refrigeration and cooling systems, including chillers, cooling towers, and precision air conditioners, to reject this waste heat from the facility. The heat transfer process is shown in Figure 1. Within this chain, chillers, cooling towers, water pumps, and precision air conditioners are the main energy consumers and therefore the focus of efficiency work.

- Heat Collection Side: High-efficiency heat sinks and precise air supply are used to carry heat away from the components.

- Precision Air Conditioning Side: The cooling system has evolved from room-level refrigeration to modular machine room-level and rack-level refrigeration, which places cooling closer to the heat source and reduces the energy consumed in transporting the refrigerant.

- Cold Source Preparation Link: The system has shifted from air cooling to water cooling and natural cooling to improve external heat transfer efficiency.

2 End-to-End Liquid Cooling System Design

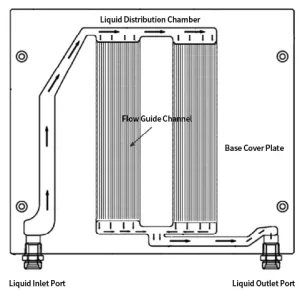

2.1 Board-Level Liquid Cooling Design

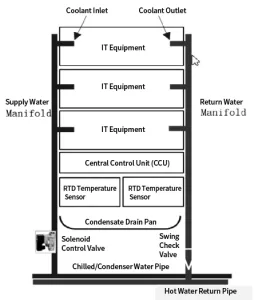

2.2 Rack-Level Liquid Cooling

leakage monitoring, and prevention of sudden major leaks.

- Production phase: Ensure process reliability, pressure-test 100% of cold plates, and perform ultrasonic flaw detection on sampled units; verify the rated number of insertion/extraction cycles and the long-term reliability of quick-plug connectors.

- Installation phase: Flush secondary pipelines thoroughly before installation so that impurity particles do not cause quick-connector blockages, jammed springs, or rubber ring failures, any of which could lead to leaks during operation. These measures primarily aim to minimize the occurrence of leaks.

- Water immersion sensor detection: Sensors are installed on water collection trays. The trays both make leaks detectable and keep liquid from spilling outside the rack, which limits fault spread. The method is mature and reliable, but it detects a leak only after the working fluid has passed over the boards and rack fittings and accumulated in the tray, by which point significant leakage may already have damaged boards and components.

- Real-time monitoring: Tracer substances with low boiling points are mixed into the working fluid. When a leak occurs, built-in gas sensors on the boards detect the tracer.
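The two detection methods above can be combined into a simple alarm policy: a tracer reading on a board gives an early warning, while a wet collection tray indicates that fluid has already accumulated. The following is a minimal Python sketch of that idea, not an implementation from this document. The `RackReading` fields, the `TRACER_ALARM_PPM` threshold, and the severity names are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class LeakSeverity(Enum):
    NONE = 0
    EARLY = 1   # tracer gas detected on a board, tray still dry
    MAJOR = 2   # working fluid has accumulated in the collection tray


# Hypothetical threshold; a real value depends on the tracer and sensor used.
TRACER_ALARM_PPM = 50.0


@dataclass
class RackReading:
    tray_wet: bool      # water immersion sensor on the collection tray
    tracer_ppm: float   # board-level gas sensor reading for the tracer


def classify_leak(reading: RackReading) -> LeakSeverity:
    """Map one set of sensor readings to a leak severity."""
    # A wet tray means fluid has already passed over boards: treat as major.
    if reading.tray_wet:
        return LeakSeverity.MAJOR
    # Tracer gas without a wet tray suggests a leak caught at an early stage.
    if reading.tracer_ppm >= TRACER_ALARM_PPM:
        return LeakSeverity.EARLY
    return LeakSeverity.NONE
```

Checking the tray first reflects the ordering described above: by the time the immersion sensor triggers, the leak is no longer at an early stage, regardless of the tracer reading.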

2.3 Machine Room-Level Liquid Cooling Design

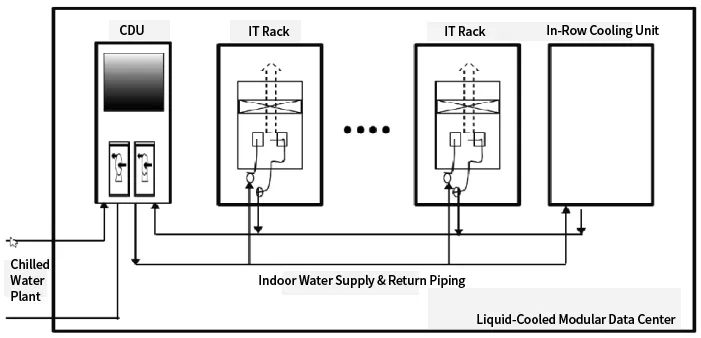

Machine room-level heat dissipation aims to transfer the heat emitted from racks to the outdoors. The machine room-level liquid cooling solution comprises liquid-cooled modular machine rooms, chillers, water pumps, cooling towers, and pipelines, among other components, as shown in Figure 4.
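To give a sense of scale for the heat that the liquid loop must carry outdoors, the sketch below applies the standard sensible-heat balance, heat load = mass flow × specific heat × temperature rise, to estimate the coolant flow needed. It is an illustration only: the function name, the water property constants, and the example inputs are assumptions, not figures from this document, and a real loop may use a different working fluid.

```python
# Approximate properties of water (assumed working fluid for this sketch).
WATER_CP_J_PER_KG_K = 4186.0      # specific heat capacity
WATER_DENSITY_KG_PER_M3 = 997.0   # density near 25 °C


def required_flow_l_per_min(heat_load_w: float, delta_t_k: float) -> float:
    """Estimate the coolant flow (L/min) needed to remove a heat load.

    heat_load_w: heat to be removed, in watts.
    delta_t_k:   coolant temperature rise between supply and return, in kelvin.
    """
    if heat_load_w < 0:
        raise ValueError("heat_load_w must be non-negative")
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    # Sensible-heat balance: Q = m_dot * c_p * delta_T, solved for m_dot.
    mass_flow_kg_s = heat_load_w / (WATER_CP_J_PER_KG_K * delta_t_k)
    volume_flow_m3_s = mass_flow_kg_s / WATER_DENSITY_KG_PER_M3
    # Convert m^3/s to L/min.
    return volume_flow_m3_s * 1000.0 * 60.0
```

For example, under these assumptions a hypothetical 30 kW rack with a 10 K coolant temperature rise would need roughly 43 L/min of water; halving the allowed temperature rise doubles the required flow.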