Heat Sink Solutions: Development of Data Centers

| Company | 2024 YE (H100 equivalent) | 2025 (GB200) | 2025 YE (H100 equivalent) |
|---|---|---|---|
| MSFT | 750,000 – 900,000 | 800,000 – 1,000,000 | 2,500,000 – 3,100,000 |
| GOOG | 1,000,000 – 1,500,000 | 400,000 | 3,500,000 – 4,200,000 |
| META | 550,000 – 650,000 | 650,000 – 800,000 | 1,900,000 – 2,500,000 |
| AMZN | 250,000 – 400,000 | 360,000 | 1,300,000 – 1,600,000 |
| xAI | – 100,000 | 200,000 – 400,000 | 550,000 – 1,000,000 |

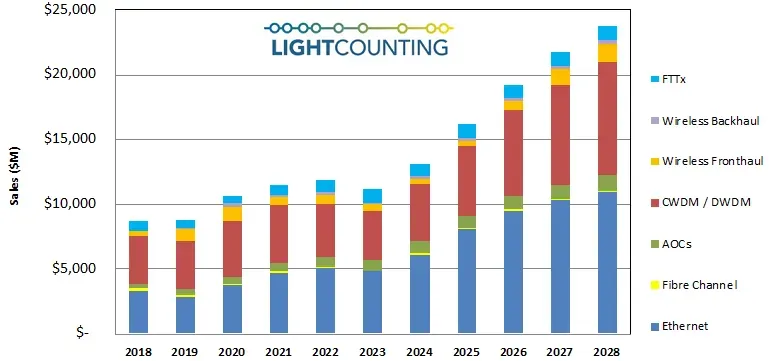

The rise of large-scale AI models has accelerated the adoption of high-speed optical modules for data communication, particularly in the telecom and datacom markets. As leading cloud service providers increase their investment in AI clusters, demand for high-end optical communication has surged, causing shortages of components for 400G and 800G optical modules. LightCounting predicts that Ethernet optical module sales will grow by nearly 30% year-on-year in 2024, with growth gradually resuming across market segments. After the global optical module market shrank by 6% year-on-year in 2023, its compound annual growth rate (CAGR) from 2024 to 2028 is expected to reach 16%. Coherent, a leading optical module company, stated that the global market for AI-driven 800G, 1.6T, and 3.2T datacom optical modules may grow at a CAGR of over 40% during the five years from 2024 to 2028, rising from $600 million in 2023 to $4.2 billion in 2028.
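As a quick sanity check on Coherent's projection, the CAGR implied by growth from $600 million (2023) to $4.2 billion (2028) can be computed directly. The sketch below assumes five compounding years (2023 as the base, 2028 as the end year); the helper names `cagr` and `project` are illustrative, not from any library.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values `years` apart."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value after compounding `rate` annually for `years` years."""
    return start_value * (1 + rate) ** years


# Coherent's figures (in $ billions): 0.6 in 2023 -> 4.2 in 2028.
# Assumption: 2023 is the base year, giving 5 compounding periods.
implied = cagr(0.6, 4.2, 2028 - 2023)
print(f"Implied CAGR 2023-2028: {implied:.1%}")

# A flat 40% CAGR from the same base would reach only about $3.23B by 2028,
# which is why a $4.2B endpoint corresponds to "over 40%".
print(f"At exactly 40%: ${project(0.6, 0.40, 5):.2f}B")
```

Under these assumptions the implied rate is roughly 47.6%, consistent with the "over 40%" figure quoted above.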

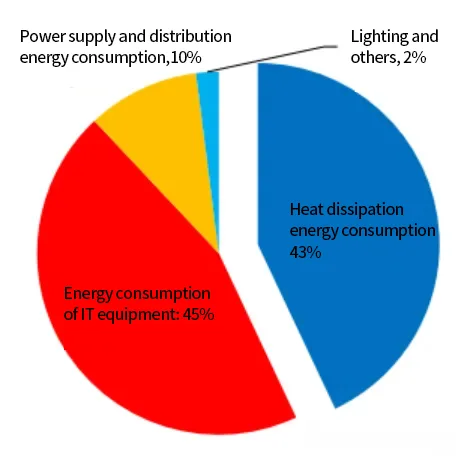

Data centers consume enormous amounts of electrical energy. This consumption consists mainly of IT equipment, cooling, power supply and distribution, and lighting and other loads. IT equipment and cooling are the two largest components, with cooling alone accounting for 43% of the total.
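To make the 43% cooling share concrete, the sketch below splits a facility's total energy across the four components listed above and computes Power Usage Effectiveness (PUE), the widely used ratio of total facility energy to IT equipment energy. Only the 43% cooling share comes from the text; the other shares and the 1 GWh total are hypothetical placeholders chosen so the shares sum to 100%.

```python
# Hypothetical breakdown: only the 43% cooling share is from the text above;
# the remaining shares are illustrative placeholders that sum to 1.0.
SHARES = {
    "it_equipment": 0.45,
    "cooling": 0.43,
    "power_distribution": 0.10,
    "lighting_other": 0.02,
}


def component_energy(total_kwh: float, shares: dict[str, float]) -> dict[str, float]:
    """Split a facility's total energy (kWh) across components by share."""
    if abs(sum(shares.values()) - 1.0) > 1e-9:
        raise ValueError("shares must sum to 1")
    return {name: total_kwh * share for name, share in shares.items()}


def pue(shares: dict[str, float]) -> float:
    """PUE = total facility energy / IT equipment energy."""
    return 1.0 / shares["it_equipment"]


breakdown = component_energy(1_000_000, SHARES)  # hypothetical 1 GWh facility
print(f"Cooling energy: {breakdown['cooling']:,.0f} kWh")
print(f"Implied PUE: {pue(SHARES):.2f}")
```

With these placeholder shares, cooling draws 430,000 kWh of the 1 GWh total and the implied PUE is about 2.22; any reduction in the cooling share lowers PUE directly, which is what makes more efficient heat dissipation worthwhile.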

>>>Heat Sink Solutions: Scaling for Data Centers and Chip Evolution

>>>Heat Sink Solutions: Development of Data Centers

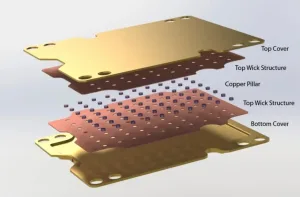

>>>Heat Sink Solutions: Vapor Chamber (VC) Cooling Technology

>>>Heat Sink Solutions: 3D VC (Three-Dimensional Two-Phase Homogeneous Temperature Technology)

>>>Heat Sink Solutions: Liquid Cooling Heat Dissipation Technology

>>>Heat Sink Solutions: Other Cooling Technologies (Diamond and Graphene Cooling)

>>>Heat Sink Solutions: Heat Dissipation Path and Development Route of Thermal Interface Materials