Advancing Liquid Cooling Technology for High-Density Servers

Introduction:

With the continued evolution of high-density server technologies, liquid cooling has emerged as a crucial solution to growing thermal challenges. In collaboration with Intel, Inspur Information has dedicated significant effort to optimizing liquid cooling for high-density general-purpose servers. Beyond the widely used CPU and GPU liquid cooling, we have expanded our research and development to cover high-power memory modules, SSDs, OCP network cards, PSUs, PCIe cards, and optical modules.

By leveraging these liquid cooling technologies, we aim to provide a comprehensive solution for industries such as internet services and telecommunications.

Product Integration and Cooling Solutions:

Our innovative liquid cooling solutions include specialized cooling components such as heat pipe heat sinks, liquid cold plates, and skived heat sinks, which are integral to managing heat dissipation across critical components. These solutions not only enhance server performance but also ensure system stability while meeting diverse user requirements.

Expansion of Liquid Cooling to High-Power Components

Beyond the industry's widespread adoption of liquid cooling for CPUs and GPUs, this work explores liquid cooling for high-power memory, solid-state drives (SSDs), OCP network cards, power supply units (PSUs), PCIe cards, and optical modules. The result is the industry’s highest liquid cooling coverage, which meets the diverse deployment requirements of users in sectors such as internet services and telecommunications and gives those customers general-purpose capabilities and broad technical support.

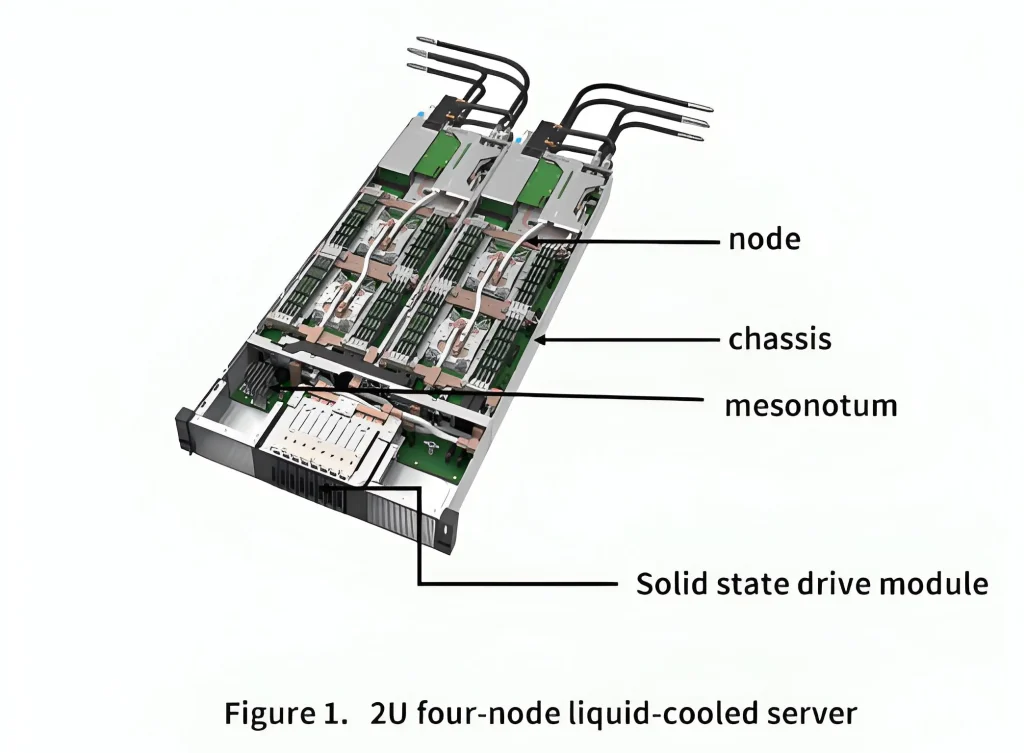

Inspur's High-Density Liquid-Cooled Server System

This full liquid cooling cold plate system is based on Inspur’s 2U four-node high-density computing server, the i24. Each liquid-cooled node supports two 5th Gen Intel Xeon Scalable processors, 16 DDR5 memory modules, one PCIe expansion card, and one OCP 3.0 network card. The system supports up to eight SSDs, providing high-density computing power while fulfilling storage needs. The primary heat-generating components in the server are the CPUs, memory, I/O cards, local drives, and power supply unit (PSU).

Achieving Near-100% Liquid Cooling Efficiency

Approximately 95% of the system’s heat is removed by cold plates in direct contact with the heat sources, which transfer the heat to the coolant. The remaining 5% is captured by an air-liquid heat exchanger mounted at the rear of the PSU, where coolant carries the heat away. Together these give a near-100% liquid cooling heat capture rate at the system level.

1. System Composition and Flow Layout

1) Full Liquid Cooling Server Overview:

The 2U four-node full liquid cooling server system consists of nodes, a chassis, a middle/backplane, and SSD modules. The nodes connect to the chassis through quick-connect fittings and power and signal connectors, which allows blind mating of the coolant, power, and signal connections.

2) Full Liquid Cooling Server Node Overview:

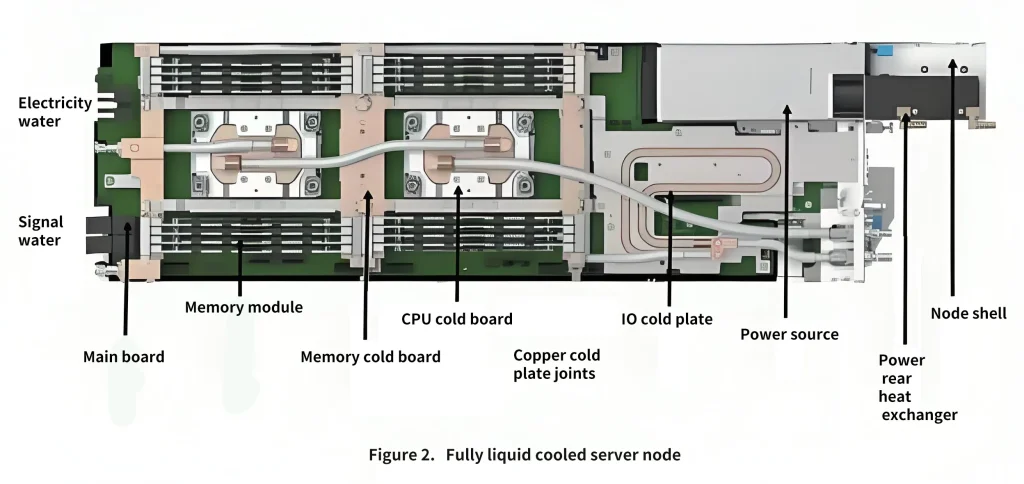

Each full liquid cooling server node includes the node shell, motherboard, CPU chips, memory modules, memory cold plates, CPU cold plates, I/O cold plates, PSU, and rear-mounted heat exchanger.

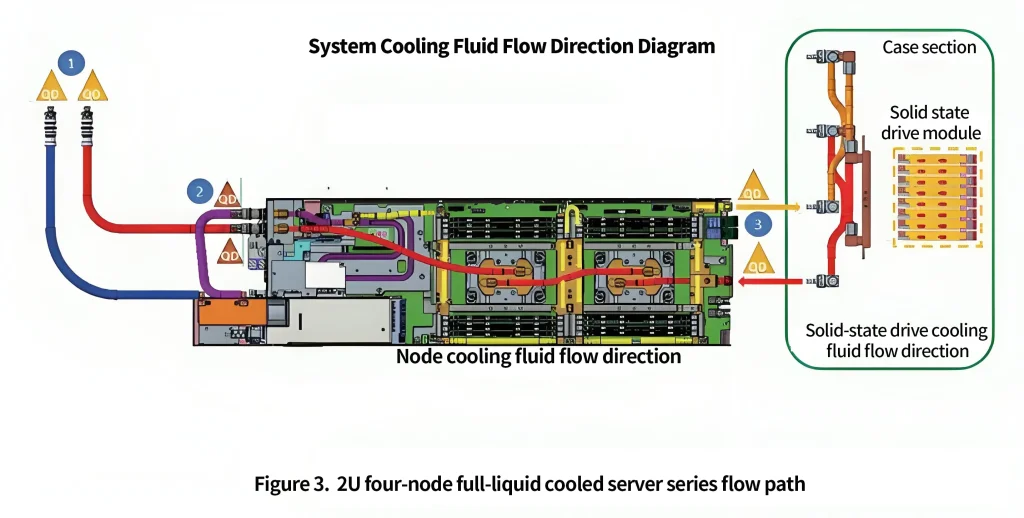

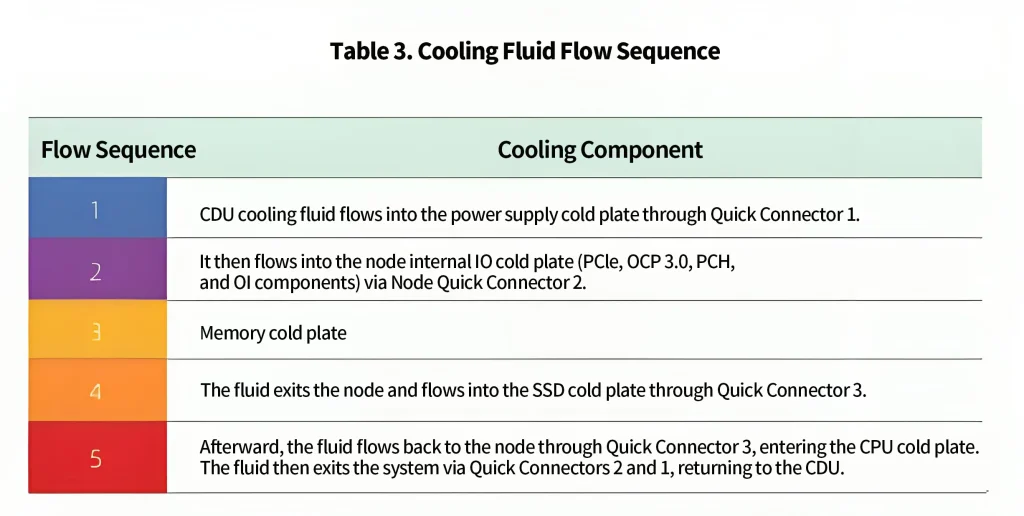

2. Flow Path Selection and Flow Rate Calculation

To reduce the design complexity of the flow path, the liquid cooling system adopts a series-flow design in which the coolant flows from low-power components to high-power components. The flow directions are illustrated in the following diagram and table.

The flow rate of the full liquid cooling server must meet the thermal dissipation needs:

- To ensure the long-term reliability of secondary-side piping materials, the return water temperature on the secondary side should not exceed 65°C.

- To ensure the server components meet their thermal dissipation needs under defined boundary conditions, copper cold plates and PG25 coolant are selected for flow rate design analysis.

To meet the requirement of a return water temperature not exceeding 65°C, the minimum flow rate for a single node (PG25) is calculated using the following formula:

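Qmin = Psys / (ρ * C * ΔT) ≈ 1.3 (LPM)

Here Psys is the heat load of a single node, ρ and C are the density and specific heat capacity of the coolant, and ΔT is the allowable coolant temperature rise between supply and return.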
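The flow-rate relation can be sketched in Python. This is a minimal illustration under stated assumptions, not the design tool used for the server: the function names, the PG25 property values (roughly 1020 kg/m³ and 3900 J/(kg·K), both of which vary with temperature), the node heat load, the allowed temperature rise, and the per-stage powers are all hypothetical, since the source does not state the inputs behind its 1.3 LPM figure. The second function applies the same energy balance stage by stage to a series loop ordered from low-power to high-power components.

```python
def min_flow_lpm(power_w, density_kg_m3, cp_j_kg_k, delta_t_k):
    """Minimum volumetric flow in L/min: Q = P / (rho * C * dT)."""
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    flow_m3_s = power_w / (density_kg_m3 * cp_j_kg_k * delta_t_k)
    return flow_m3_s * 1000.0 * 60.0  # m^3/s -> L/min


def series_outlet_temps(inlet_c, flow_lpm, density_kg_m3, cp_j_kg_k, stage_powers_w):
    """Coolant temperature (deg C) after each stage of a series loop."""
    mass_flow_kg_s = flow_lpm / 60000.0 * density_kg_m3  # L/min -> m^3/s -> kg/s
    temps = []
    t = inlet_c
    for p in stage_powers_w:
        t += p / (mass_flow_kg_s * cp_j_kg_k)  # temperature rise across this stage
        temps.append(t)
    return temps


if __name__ == "__main__":
    # Approximate PG25 properties (assumed, temperature dependent).
    RHO = 1020.0  # kg/m^3
    CP = 3900.0   # J/(kg*K)

    # Hypothetical node heat load and allowed coolant temperature rise.
    q = min_flow_lpm(power_w=1000.0, density_kg_m3=RHO, cp_j_kg_k=CP, delta_t_k=15.0)
    print(f"minimum flow: {q:.2f} L/min")

    # Hypothetical stage powers, ordered low-power to high-power.
    stages = [50.0, 150.0, 800.0]
    for temp in series_outlet_temps(45.0, q, RHO, CP, stages):
        print(f"coolant after stage: {temp:.1f} C")
```

With a fixed return-temperature limit, a higher supply temperature leaves a smaller ΔT and therefore requires a higher flow rate for the same heat load.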

3. Key Components of the Full Liquid Cooling Server Cold Plates

1) CPU Cold Plate Design:

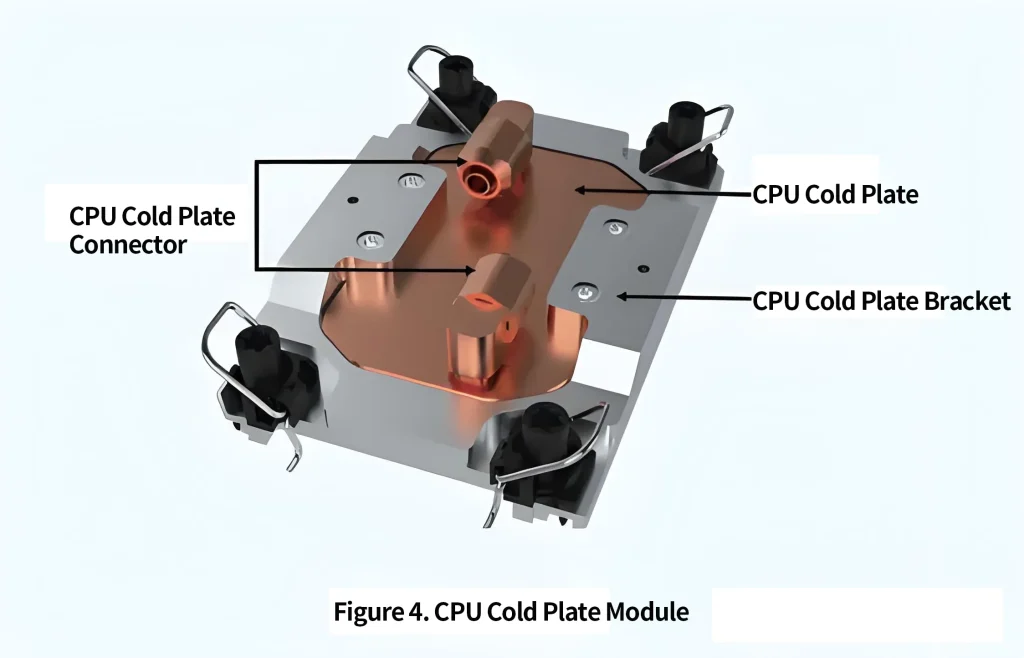

The CPU cold plate is designed for 5th Gen Intel Xeon Scalable processors and balances heat dissipation, structural performance, yield, cost, and compatibility with different materials. The CPU cold plate module consists of an aluminum bracket, the cold plate itself, and cold plate connectors.

2) Memory Liquid Cooling Design:

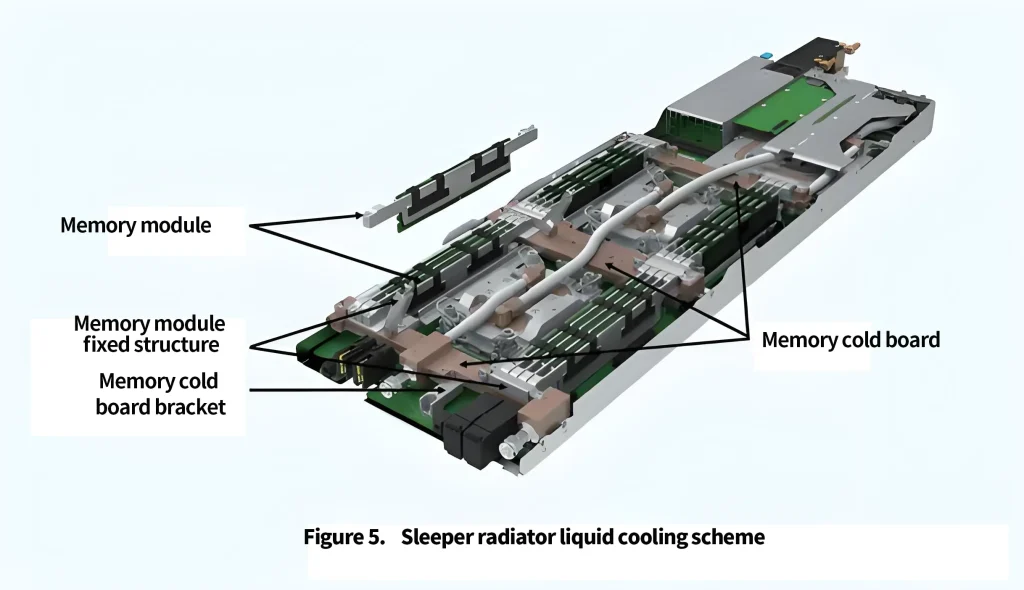

The memory liquid cooling design uses an innovative sleeper rail heat sink solution, named for its resemblance to railroad ties. This solution combines traditional air cooling and cold plate cooling, using heat pipes (or pure aluminum/copper plates, vapor chambers, etc.) to transfer heat from the memory to the cold plates. The cold plates then carry the heat away via the coolant.

Memory and heat sinks can be assembled outside the server using jigs to form the smallest maintenance unit (referred to as the memory module). The memory cold plate includes retention structures that ensure good contact between the memory module and the cold plate. The module can be secured either with screws or with a tool-free mechanism for easy maintenance.

Compared to existing tubing-based memory liquid cooling solutions, the sleeper rail heat sink offers several advantages:

- Ease of Maintenance: Unlike traditional solutions, the memory module can be serviced without removing the heat sink, greatly improving assembly efficiency and reliability.

- Versatility: It supports different memory chip thicknesses and spacings without compromising heat dissipation, offering a standard solution that’s reusable and adaptable.

- Cost-Effectiveness: The heat sink can be tailored to the memory power requirements to optimize cost, and can dissipate 30 W or more at a 7.5 mm memory spacing.

- Ease of Manufacturing and Assembly: No liquid cooling tubing is required, making it compatible with standard manufacturing processes used for air-cooled solutions.

- Reliability: It minimizes the risk of damaging memory chips or thermal pads during assembly and ensures long-term reliable performance, even with multiple insertions and removals.

3) SSD Liquid Cooling Design:

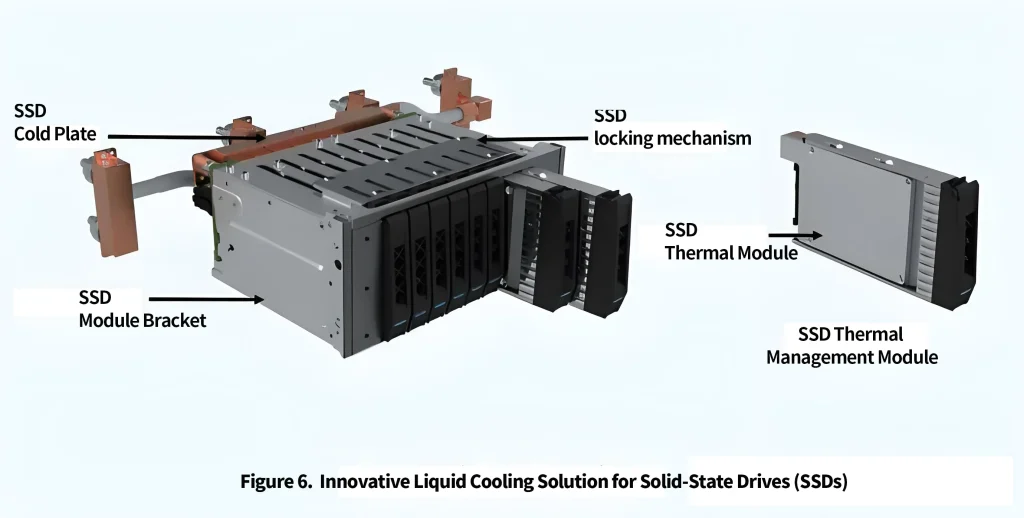

The innovative SSD liquid cooling solution uses heat pipes to transfer heat from the SSD area to a cold plate via thermal interface materials. The SSD liquid cooling system consists of an SSD module with a heat sink, SSD cold plate, SSD module locking mechanism, and SSD bracket.

This SSD cooling solution offers several advantages over existing approaches:

- Supports over 30 hot-swap operations without power interruptions.

- No risk of damaging thermal interface materials during installation.

- Lower manufacturing complexity, using traditional air cooling and CPU cold plate processes.

- No water within the SSDs, allowing multiple drives to share a single cold plate and reducing leakage risks.

- Flexible Adaptation: Supports different SSD thicknesses and quantities.

4) PCIe/OCP Card Liquid Cooling Design:

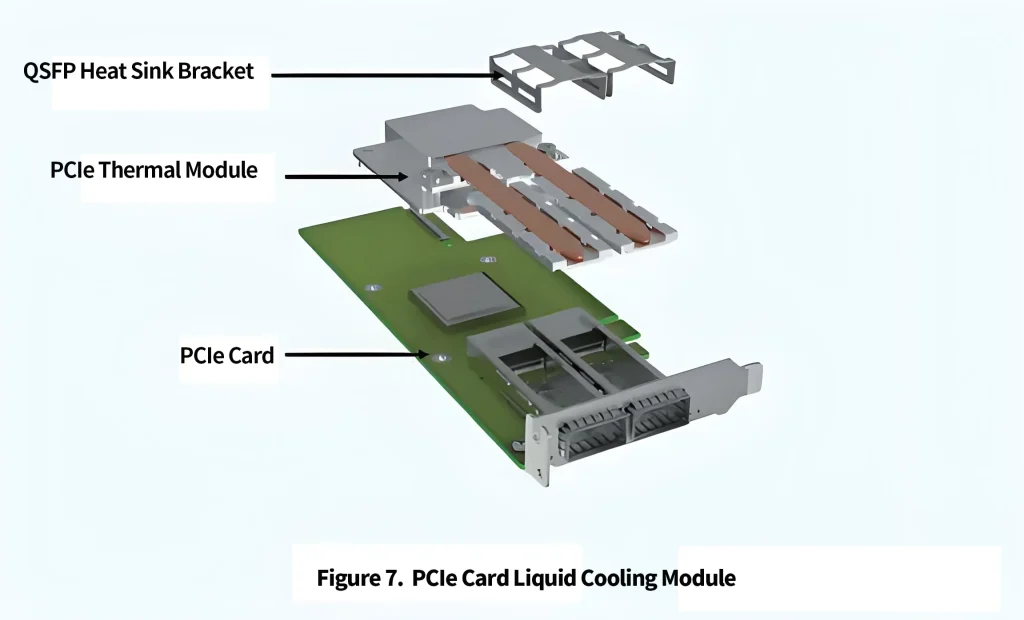

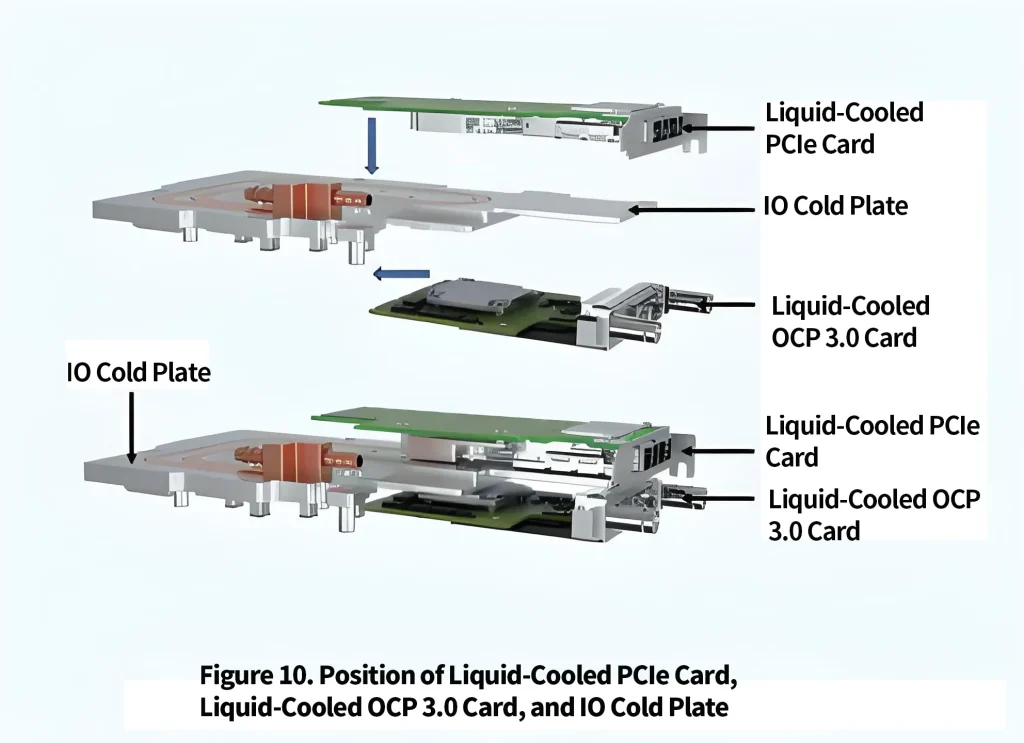

1.1 PCIe Liquid Cooling Solution

This solution adapts a PCIe card to fit a cold plate for heat dissipation. Heat from optical modules and PCIe chipsets is transferred through heat pipes to a cooling module and ultimately to the I/O cold plate via thermal interface materials.

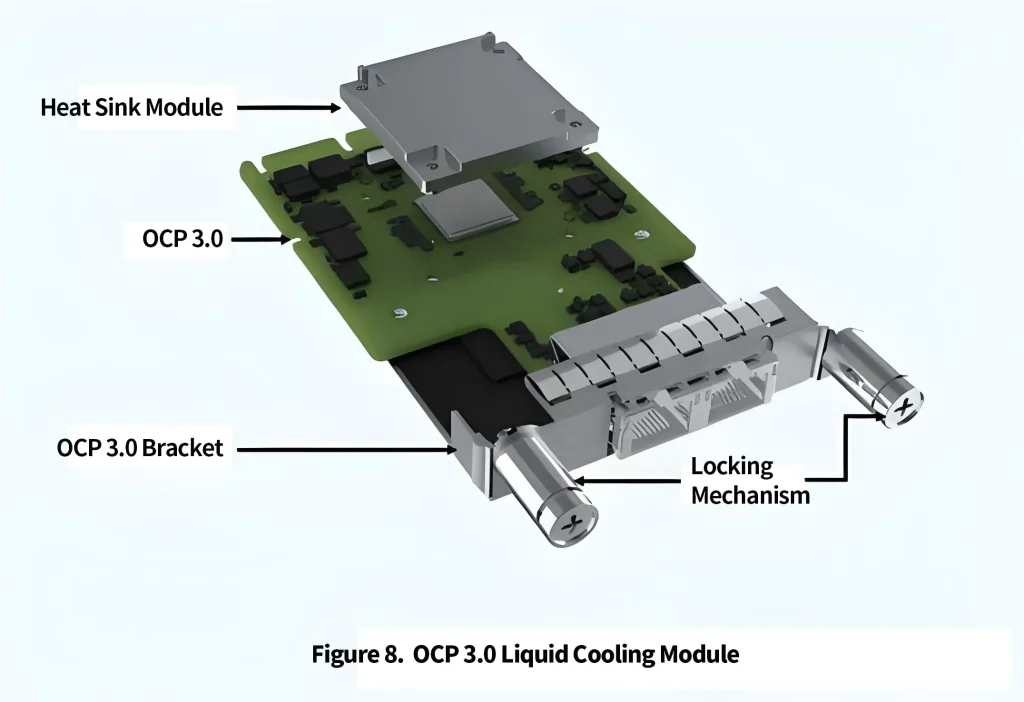

1.2 OCP 3.0 Liquid Cooling Solution

Similar to PCIe cards, a liquid cooler is customized for OCP 3.0 cards to transfer heat from the chips to a liquid cooling heat sink, which then exchanges heat with the I/O cold plate.

Both PCIe and OCP solutions are designed with locking mechanisms to ensure long-term contact reliability and to facilitate maintenance.

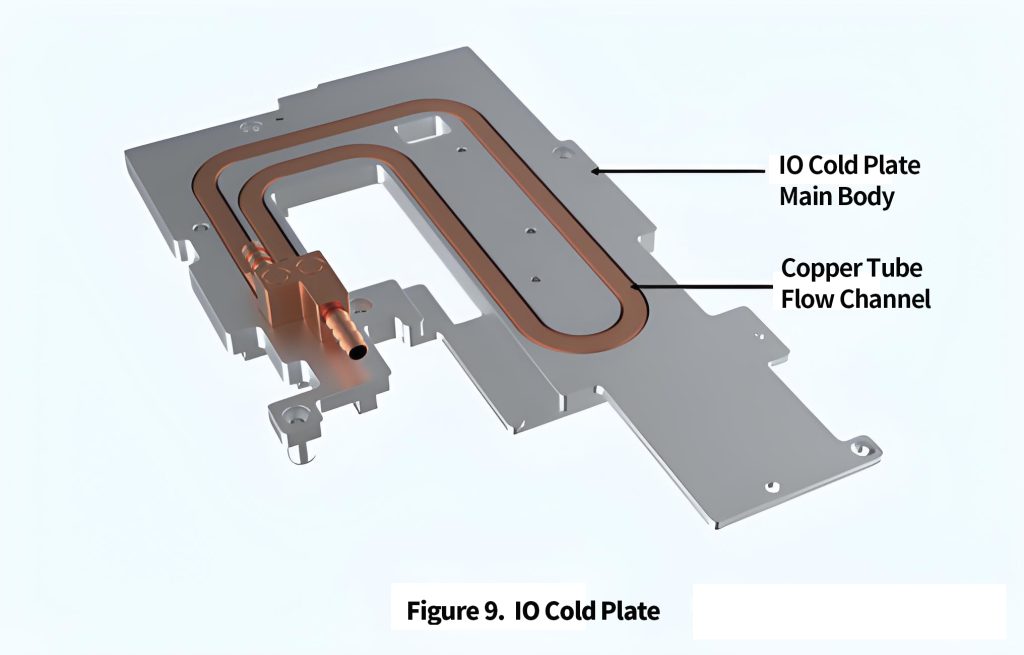

1.3 IO Cold Plate Solution

The IO cold plate is a multifunctional cooling solution designed not only to dissipate heat from the motherboard’s IO area but also to cool liquid-cooled PCIe cards and liquid-cooled OCP 3.0 cards.

The cold plate consists of the main body and copper tube channels. The main body is made from aluminum alloy, while the copper tubes carry the cooling fluid and enhance heat dissipation. The design must be optimized based on the motherboard layout and the specific thermal management requirements of the components. The cooling modules on the liquid-cooled PCIe and OCP 3.0 cards make contact with the IO cold plate along the specified direction. The materials selected for the cooling fluid channels should be compatible with the system’s coolant and other wetted materials. This liquid cooling solution for the IO cold plate addresses the multi-dimensional assembly needs of various components.

The combination of copper and aluminum materials solves compatibility issues, ensuring optimal heat dissipation while reducing the cold plate’s weight by 60% and cutting costs.

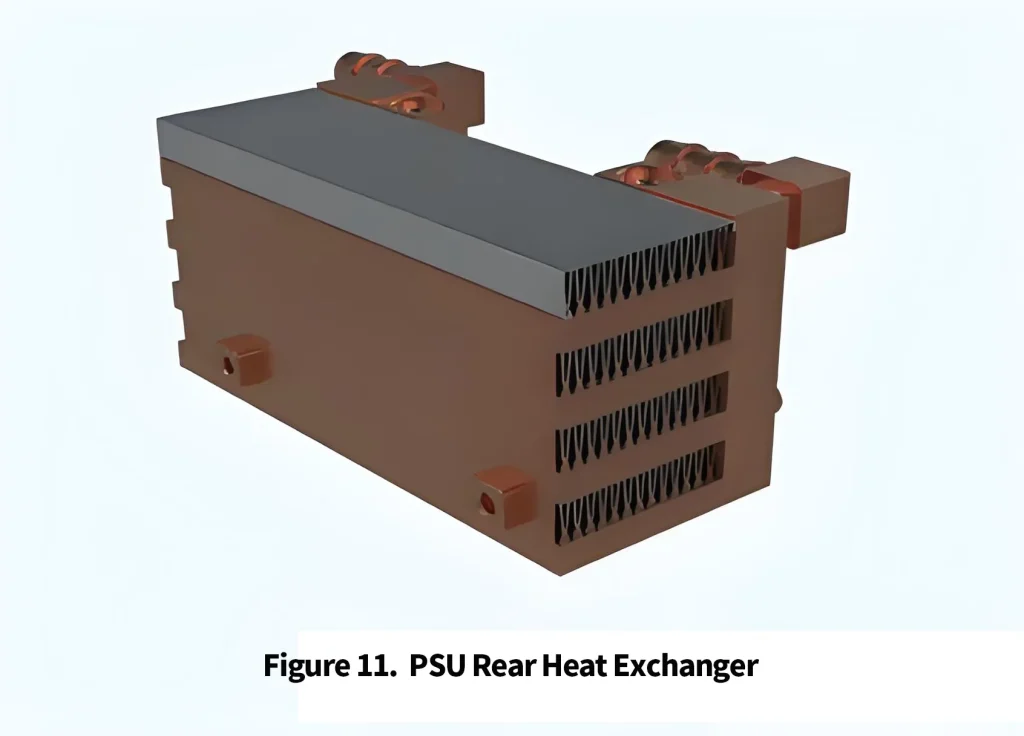

5) PSU Cold Plate Design:

The PSU liquid cooling solution uses an external air-liquid heat exchanger to cool the hot air exhausted by the PSU fans, minimizing the system’s thermal impact on the data center environment.

The rear-mounted PSU heat exchanger features a multi-layer structure, with cooling channels and finned surfaces. The design ensures efficient heat dissipation while balancing system space and cost.

This innovative PSU liquid cooling solution reduces the need for custom liquid-cooled power supplies, cutting development costs by over 60%. It can flexibly adapt to various PSU designs without requiring major modifications, ensuring cost savings.

For full cabinet applications, the solution also includes a centralized air-liquid heat exchanger, capable of supporting up to 150 nodes while significantly reducing costs compared to individual heat exchangers for each PSU.

By utilizing this centralized solution, the system can efficiently manage heat within the cabinet, maintaining a stable internal environment without affecting the data center. This approach embodies the concept of “Rack as a Computer”.